Sand Balls Mechanics Implementation: The Best Way To Deform A Mesh In Unity — Part II: MeshData And Optimization

Part I: Sand Balls Mechanics Implementation: The Best Way To Deform A Mesh In Unity

Part II: this post

In this post, we will continue to learn how to deform a mesh in Unity using a real-world example. You will learn how to use MeshData API and then it will be compared to other techniques from the previous post. We will check each approach by using performance testing to find the fastest one. In addition, we will address issues raised before based on test results to achieve a smoother user experience.

Table Of Contents

MeshData Implementation

MeshData API allows working with meshes inside jobs. It was first added in Unity 2020.1. Let’s check if it is a suitable technique for the mechanic.

To acquire the data of the current mesh we use Mesh.AcquireReadOnlyMeshData(_mesh) and then create an output data by calling Mesh.AllocateWritableMeshData(1).

_output = Mesh.AllocateWritableMeshData(1);

_input = Mesh.AcquireReadOnlyMeshData(_mesh);

We can then pass these structs into a job.

The job looks similar to the previous technique, but here we don’t pass the data buffer NativeArray<VertexData> _vertexData that was used to modify and apply data to a mesh each time deformation was invoked. Now we can use input and output MeshData. We get vertex data from both and assign modified input data to the output. Simple as that.

[BurstCompile]

public struct ProcessMeshDataJob : IJobParallelFor

{

private static readonly Vector3 Up = new Vector3(0, 1, 0);

[ReadOnly] private readonly Mesh.MeshData _outputData;

[ReadOnly] private readonly Mesh.MeshData _vertexData;

[ReadOnly] private readonly float _radius;

[ReadOnly] private readonly float _power;

[ReadOnly] private readonly Vector3 _point;

public ProcessMeshDataJob(Mesh.MeshData vertexData,

Mesh.MeshData outputData,

float radius,

float power,

Vector3 point)

{

_vertexData = vertexData;

_outputData = outputData;

_radius = radius;

_power = power;

_point = point;

}

public void Execute(int index)

{

var vertexData = _vertexData.GetVertexData<VertexData>()[index];

var outputVertexData = _outputData.GetVertexData<VertexData>();

var position = vertexData.Position;

var distance = (position - _point).sqrMagnitude;

var modifier = distance < _radius ? 1 : 0;

outputVertexData[index] = new VertexData

{

Position = position - Up * modifier * _power,

Normal = vertexData.Normal,

Uv = vertexData.Uv

};

}

}

You might wonder why the job calls GetVertexData for every vertex, but here we just get a pointer into the raw vertex buffer data with no memory allocations, conversions, or data copies. And in my case, there was absolutely no difference between this code and when I tried to call GetVertexData on the main thread and pass NativeArrays as arguments into the job.

An output MeshData must also have its index data set. However, when I tried to do it via the job by calling GetIndexData<ushort>() and filling it with data I had a weird behavior or even editor crashes on 2021.3.0f1. So I used the following approach that doesn’t affect performance much as it is basically a memcpy call:

private void UpdateMesh(Mesh.MeshData meshData)

{

var outputIndexData = meshData.GetIndexData<ushort>();

_meshDataArray[0].GetIndexData<ushort>().CopyTo(outputIndexData);

_meshDataArray.Dispose();

...

}

It is important to dispose a MeshDataArray struct, as modifying a Mesh while a MeshDataArray struct for that Mesh exists results in memory allocations and data copies.

Now let’s compare how it performs using the same performance test from Part I.

Configurations are still the same: Android OnePlus 9 (Snapdragon 888) VSync is forced on.

| Method | Median | Dev | StdDev |

|---------------------------------------------------|---------:|---------:|---------:|

| DeformableMeshPlane_ComputeShader_PerformanceTest | 8,27 ms | 0,43 ms | 3,53 ms |

| DeformableMeshPlane_Naive_PerformanceTest | 8,27 ms | 0,39 ms | 3,25 ms |

| DeformableMeshPlane_NaiveJob_PerformanceTest | 8,27 ms | 0,19 ms | 1,58 ms |

| DeformableMeshPlane_MeshData_PerformanceTest | 8,27 ms | 0,19 ms | 1,58 ms |Standalone windows build running on:

Intel Core i7-8750H CPU 2.20GHz (Coffee Lake), 1 CPU, 12 logical and 6 physical cores

NVIDIA GeForce GTX 1070| Method | Median | Dev | StdDev |

|---------------------------------------------------|---------:|---------:|---------:|

| DeformableMeshPlane_ComputeShader_PerformanceTest | 1,45 ms | 3,91 ms | 5,66 ms |

| DeformableMeshPlane_Naive_PerformanceTest | 15,37 ms | 0,11 ms | 1,65 ms |

| DeformableMeshPlane_NaiveJob_PerformanceTest | 6,76 ms | 0,88 ms | 5,97 ms |

| DeformableMeshPlane_MeshData_PerformanceTest | 6,72 ms | 0,83 ms | 5,60 ms |The results are similar to the previous technique that uses the job system. While the implementation is more complex.

As usual source code is available on GitHub JobDeformableMeshDataPlane.cs as well as below:

[RequireComponent(typeof(MeshFilter), typeof(MeshCollider))]

public class JobDeformableMeshDataPlane : DeformablePlane

{

[SerializeField] private int _innerloopBatchCount = 64;

private Mesh _mesh;

private MeshCollider _collider;

private Vector3 _positionToDeform;

private Mesh.MeshDataArray _meshDataArray;

private Mesh.MeshDataArray _meshDataArrayOutput;

private VertexAttributeDescriptor[] _layout;

private SubMeshDescriptor _subMeshDescriptor;

private ProcessMeshDataJob _job;

private JobHandle _jobHandle;

private bool _scheduled;

private bool _hasPoint;

private void Awake()

{

_mesh = gameObject.GetComponent<MeshFilter>().mesh;

CreateMeshData();

_collider = gameObject.GetComponent<MeshCollider>();

}

private void CreateMeshData()

{

_meshDataArray = Mesh.AcquireReadOnlyMeshData(_mesh);

_layout = new[]

{

new VertexAttributeDescriptor(VertexAttribute.Position,

_meshDataArray[0].GetVertexAttributeFormat(VertexAttribute.Position), 3),

new VertexAttributeDescriptor(VertexAttribute.Normal,

_meshDataArray[0].GetVertexAttributeFormat(VertexAttribute.Normal), 3),

new VertexAttributeDescriptor(VertexAttribute.TexCoord0,

_meshDataArray[0].GetVertexAttributeFormat(VertexAttribute.TexCoord0), 2),

};

_subMeshDescriptor =

new SubMeshDescriptor(0, _meshDataArray[0].GetSubMesh(0).indexCount, MeshTopology.Triangles)

{

firstVertex = 0, vertexCount = _meshDataArray[0].vertexCount

};

}

public override void Deform(Vector3 positionToDeform)

{

_positionToDeform = transform.InverseTransformPoint(positionToDeform);

_hasPoint = true;

}

private void Update()

{

ScheduleJob();

}

private void LateUpdate()

{

CompleteJob();

}

private void ScheduleJob()

{

if (_scheduled || !_hasPoint)

{

return;

}

_scheduled = true;

_meshDataArrayOutput = Mesh.AllocateWritableMeshData(1);

var outputMesh = _meshDataArrayOutput[0];

_meshDataArray = Mesh.AcquireReadOnlyMeshData(_mesh);

var meshData = _meshDataArray[0];

outputMesh.SetIndexBufferParams(meshData.GetSubMesh(0).indexCount, meshData.indexFormat);

outputMesh.SetVertexBufferParams(meshData.vertexCount, _layout);

_job = new ProcessMeshDataJob

(

meshData,

outputMesh,

_radiusOfDeformation,

_powerOfDeformation,

_positionToDeform

);

_jobHandle = _job.Schedule(meshData.vertexCount, _innerloopBatchCount);

}

private void CompleteJob()

{

if (!_scheduled || !_hasPoint)

{

return;

}

_jobHandle.Complete();

UpdateMesh(_meshDataArrayOutput[0]);

_scheduled = false;

_hasPoint = false;

}

private void UpdateMesh(Mesh.MeshData meshData)

{

var outputIndexData = meshData.GetIndexData<ushort>();

_meshDataArray[0].GetIndexData<ushort>().CopyTo(outputIndexData);

_meshDataArray.Dispose();

meshData.subMeshCount = 1;

meshData.SetSubMesh(0,

_subMeshDescriptor,

MeshUpdateFlags.DontRecalculateBounds |

MeshUpdateFlags.DontValidateIndices |

MeshUpdateFlags.DontResetBoneBounds |

MeshUpdateFlags.DontNotifyMeshUsers);

_mesh.MarkDynamic();

Mesh.ApplyAndDisposeWritableMeshData(

_meshDataArrayOutput,

_mesh,

MeshUpdateFlags.DontRecalculateBounds |

MeshUpdateFlags.DontValidateIndices |

MeshUpdateFlags.DontResetBoneBounds |

MeshUpdateFlags.DontNotifyMeshUsers);

_mesh.RecalculateNormals();

_collider.sharedMesh = _mesh;

}

}

How To Improve Performance?

Looking at the results you might wonder why we need to improve performance if the compute shader implementation has a median time of 1.45 ms. However, as discussed in Part I: Performance Tests the compute shader takes 4 frames to compute the deformation and return the result back to the CPU. So we have 3 really short frames, then 1 that has a mesh baking taking around 10 ms. And we definitely want a mesh with higher resolution to cover a bigger level, as well as having smoother edges when deformed. Given that baking might be a real performance issue.

Mesh Resolution Scalability

Let’s create another performance test to check how baking degrades with vertex count.

So here are the results on a mobile device (OnePlus 9, Snapdragon 888, VSync on (forced), 120Hz refresh rate):

| Method | Median | Dev | StdDev |

|-----------------------------------------------|---------:|---------:|---------:|

| DeformableMeshPlane_MeshResolutionTest(121) | 8,27 ms | 0,25 ms | 2,05 ms |

| DeformableMeshPlane_MeshResolutionTest(441) | 8,27 ms | 0,10 ms | 0,79 ms |

| DeformableMeshPlane_MeshResolutionTest(1681) | 8,27 ms | 0,09 ms | 0,78 ms |

| DeformableMeshPlane_MeshResolutionTest(6561) | 8,27 ms | 0,13 ms | 1,10 ms |

| DeformableMeshPlane_MeshResolutionTest(25921) | 24,83 ms | 0,16 ms | 4,07 ms |

| DeformableMeshPlane_MeshResolutionTest(40401) | 33,11 ms | 0,12 ms | 4,01 ms |

And results on a PC (VSync off)

Intel Core i7-8750H CPU 2.20GHz (Coffee Lake), 1 CPU, 12 logical and 6 physical cores NVIDIA GeForce GTX 1070

| Method | Median | Dev | StdDev |

|-----------------------------------------------|---------:|---------:|---------:|

| DeformableMeshPlane_MeshResolutionTest(121) | 1,31 ms | 2,91 ms | 3,80 ms |

| DeformableMeshPlane_MeshResolutionTest(441) | 1,65 ms | 0,59 ms | 0,97 ms |

| DeformableMeshPlane_MeshResolutionTest(1681) | 3,67 ms | 0,40 ms | 1,48 ms |

| DeformableMeshPlane_MeshResolutionTest(6561) | 10,95 ms | 0,15 ms | 1,68 ms |

| DeformableMeshPlane_MeshResolutionTest(25921) | 42,47 ms | 0,13 ms | 5,50 ms |

| DeformableMeshPlane_MeshResolutionTest(40401) | 63,08 ms | 0,07 ms | 4,53 ms |

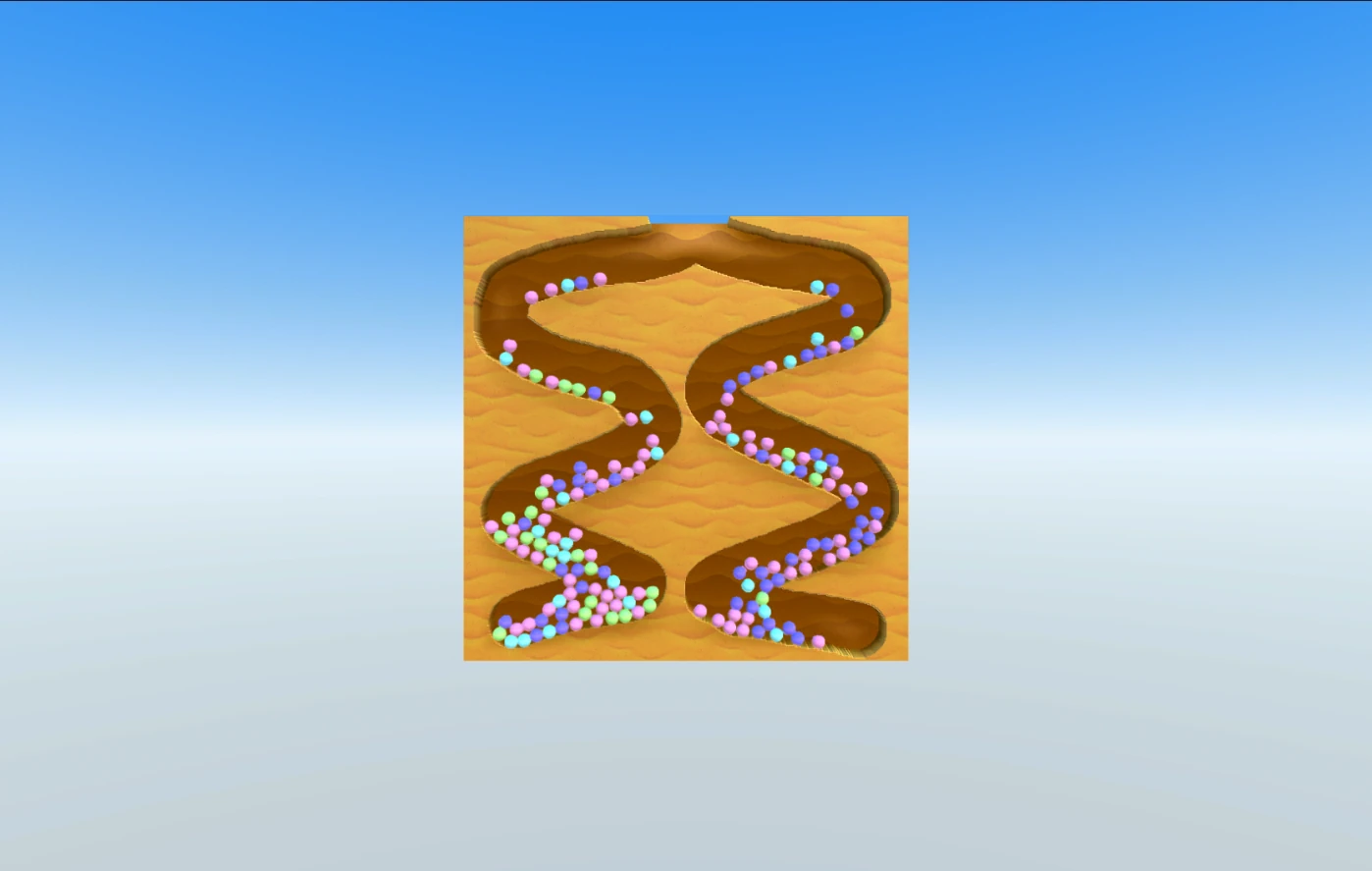

We can significantly improve performance by replacing a single plane mesh with a group of smaller planes. It allows us to make smoother edges too by increasing the overall polygon density, as most vertices on a level won’t be affected during a particular frame therefore the total number of vertices baked each frame decreases.

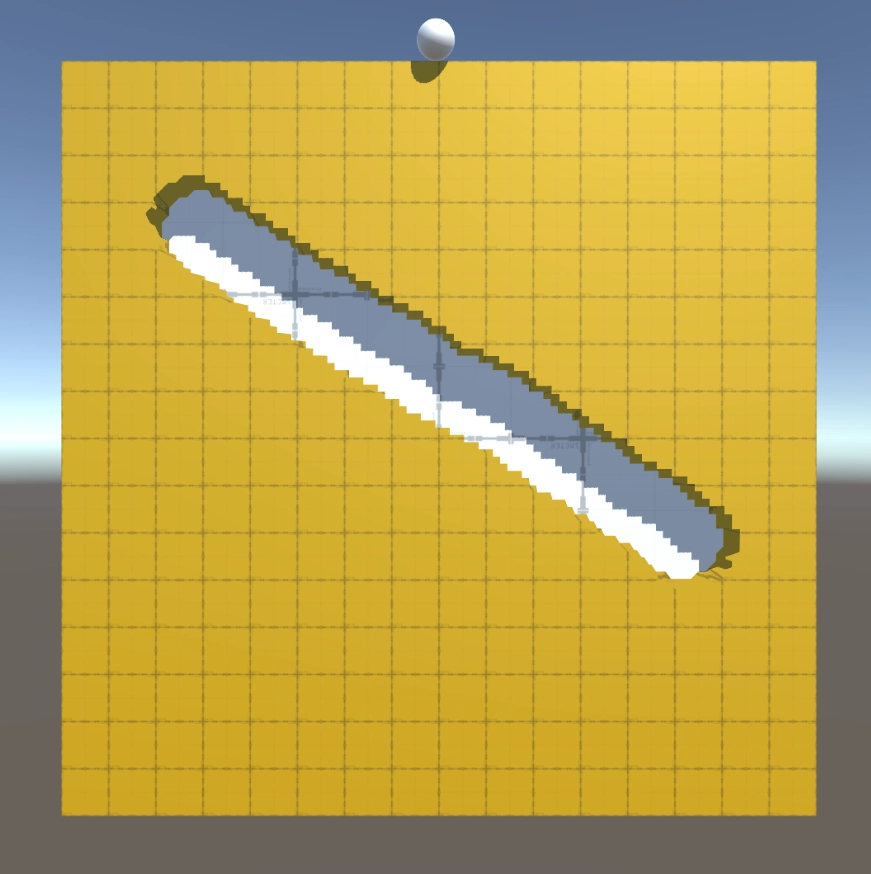

Here is an example of an edge with a mesh I used for performance tests to compare different deformation techniques:

Now compare it to a level of the same size that consists of 16 planes, but now each plane is 20k polygons:

Looks a lot better with relatively the same performance, while having a lot of flexibility to tune performance vs polygon density.

I used the composite pattern to add multiple plane support to my sample. It allowed me to leave all the other code completely unchanged. It accepts an input position and compares a square distance to each plane to decide whether it should be deformed or not.

public class DeformablePlanesComposite : DeformablePlane

{

[SerializeField] private DeformablePlane[] _deformablePlanes;

[SerializeField] private float _distanceThreshold = 1f;

private Bounds[] _bounds;

private Vector3 _positionToDeform;

private void Awake()

{

_bounds = new Bounds[_deformablePlanes.Length];

for (var i = 0; i < _deformablePlanes.Length; i++)

{

_bounds[i] = _deformablePlanes[i].GetComponent<Collider>().bounds;

}

}

public override void Deform(Vector3 positionToDeform)

{

_positionToDeform = positionToDeform;

for (var i = 0; i < _deformablePlanes.Length; i++)

{

var deformablePlane = _deformablePlanes[i];

var bounds = _bounds[i];

var sqrDistance = bounds.SqrDistance(_positionToDeform);

if (sqrDistance < _distanceThreshold)

{

deformablePlane.Deform(positionToDeform);

}

}

}

}

Bake Mesh Inside Jobs

Since we have multiple meshes that can be modified during a single frame now, we can offload baking each mesh to worker threads using the job system. Luckily the docs contain an example of how to do this: Physics.BakeMesh. Let’s compare whether moving this work to worker threads is worth it.

Actually, when you call job.Schedule() Unity may launch the job on the main thread, especially if you try to Complete() it right away. Anyway, let’s compare baking via a job and the previous alternative.

| Method | Median | Dev | StdDev |

|--------------------------------|---------:|---------:|---------:|

| MeshData_BakeInJobs | 2,25 ms | 0,59 ms | 1,33 ms |

| MeshData_BakeOnMainThread | 2,23 ms | 0,83 ms | 1,85 ms |So the results are almost the same because the bake job is scheduled on the main thread indeed. In the current implementation, there is no sense in combining deform and bake jobs together, since the output of the deformation job is used to update mesh properties, and only after that, we can bake the mesh.

private void UpdateMesh(Mesh.MeshData meshData)

{

var outputIndexData = meshData.GetIndexData<ushort>();

_meshDataArray[0].GetIndexData<ushort>().CopyTo(outputIndexData);

_meshDataArray.Dispose();

meshData.subMeshCount = 1;

meshData.SetSubMesh(0,

_subMeshDescriptor,

MeshUpdateFlags.DontRecalculateBounds |

MeshUpdateFlags.DontValidateIndices |

MeshUpdateFlags.DontResetBoneBounds |

MeshUpdateFlags.DontNotifyMeshUsers);

_mesh.MarkDynamic();

Mesh.ApplyAndDisposeWritableMeshData(

_meshDataArrayOutput,

_mesh,

MeshUpdateFlags.DontRecalculateBounds |

MeshUpdateFlags.DontValidateIndices |

MeshUpdateFlags.DontResetBoneBounds |

MeshUpdateFlags.DontNotifyMeshUsers);

_mesh.RecalculateNormals();

var job = new BakeJob(_mesh.GetInstanceID());

job.Schedule().Complete();

_collider.sharedMesh = _mesh;

}

If we combine and run jobs one after another, then the mesh will be baked again at _collider.sharedMesh = _mesh as its properties would be changed when we apply mesh data. So for now I am completely fine with this as baking smaller planes don’t take much time anyway. And I don’t want to overcomplicate the system right now.

Lock Z-axis

Locking one of the axes may sound like making physics computations simpler. Firstly, I tested it with 28 balls, and it doesn’t make any difference given that the physics simulation wasn’t taking that much before anyway. So I ramped up the number of balls to 168 but the results were the same. The median was 2.26 ms vs 2.34 ms over 289 samples for the default and the locked axis scenes accordingly.

I guess it might have an impact when the amount of balls is magnitude bigger than 168, but I don’t think it is worth checking as it is outside of the game mechanics this post is about.

On the bright side, locking the axis allows removing back and front colliders, so the setup becomes simpler.

Conclusion

Multiple planes instead of one is a must-have for such mechanics in a game. It gives both great performance, as well as a higher overall plane resolution, which makes the game a lot more satisfying. This optimization technique can be applied in various cases where you can divide the work into smaller parts and discard most of it based on some condition.

The compute shader is still the fastest technique. MeshData API in this case is a bit underwhelming showing a similar result to the simple job system implementation. Maybe this mechanic just doesn’t benefit that much from using it, because some claim to have a massive performance boost after migrating to MeshData. In the end, always profile. Make sure to measure your exact use case before trying to rewrite your feature using this or any other technique. And of course profile before making any assumptions about bottlenecks in your project to not waste time optimizing something that doesn’t need it in the first place.

Alex Merzlikin

Game developer with 8+ years of experience writing about different aspects of game development from performance and optimization to game architecture and clean code. Follow my Telegram channel to get awesome posts about game dev every day.

Leave a Reply